It didn’t begin with ambition or excitement. It began with a dull, dragging tiredness: the kind that shows up when you open yet another article titled “AI Career Roadmap 2026” and feel absolutely nothing. No motivation, no resistance, just a quiet sense that you’ve seen this before and that it didn’t help last time either.

You already have work. You already have skills. On paper, nothing is broken. And yet something feels slightly misaligned. Every roadmap promises clarity, momentum, and certainty. Learn these tools, follow these steps, and give it six months. Somehow, instead of confidence, you end up with more tabs open and less direction.

That’s where this really starts. Not with learning plans or skill stacks, but with the uncomfortable realization that most advice isn’t wrong, it’s just answering the wrong question. This isn’t a rejection of roadmaps; it’s a correction of what we’ve been calling one.

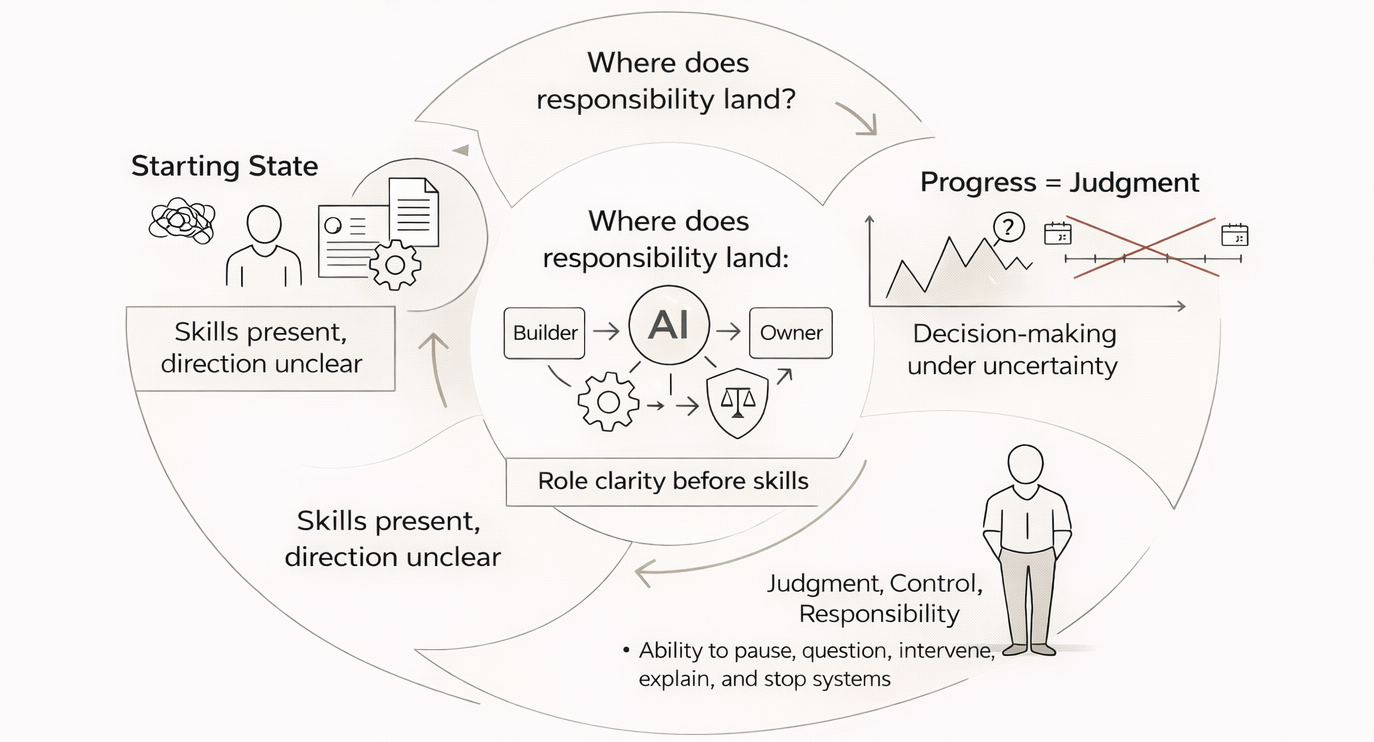

The real issue in 2026 isn’t that people don’t know what to learn. It’s that they don’t know who they are becoming while they’re learning it. Skills accumulate, tools change, progress looks visible on the surface, but the direction underneath remains unclear. And without direction, even improvement starts to feel hollow.

Before going further, it helps to be clear about what this roadmap does and doesn’t try to give you. It won’t hand you a checklist. It won’t tell you which tool guarantees relevance. It won’t compress uncertainty into a neat timeline.

What it does offer instead is orientation: a way to decide which direction of responsibility you’re moving toward, a way to make better decisions when things are unclear, and a way to start without pretending you have a blank slate.

That distinction matters more than it sounds.

Role clarity comes before skills

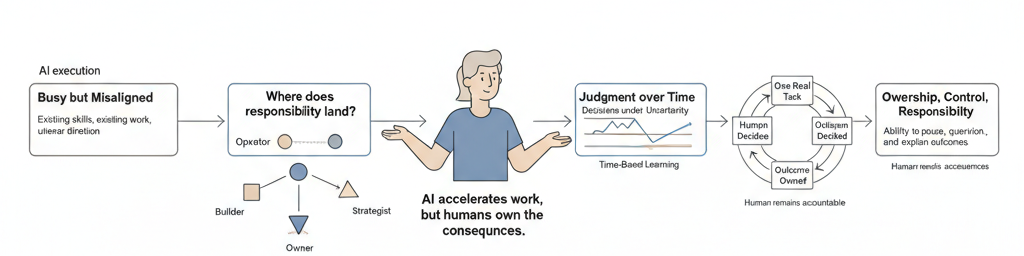

Most AI career advice assumes something quietly but firmly: that “AI” itself is a role. It isn’t. AI is not a job title. It’s a force that attaches itself to roles that already exist and stretches them, sometimes gently, sometimes until they crack. Builders, operators, strategists, advisors, translators, owners: these roles existed before AI and will exist after it. What’s changed is scale and speed.

If you don’t decide which direction of responsibility you’re moving toward, AI won’t make you stronger. It will simply make you busier, louder, and more scattered.

You see this misalignment everywhere. Designers learn to code and feel oddly irrelevant. Developers master models and still feel replaceable. Writers automate everything and quietly lose the reason they cared about the work at all. The problem isn’t competence. It’s confusion about ownership.

A builder uses AI to construct systems. A strategist uses it to stress-test decisions. An operator uses it to increase throughput. An owner uses it to manage risk. Same tools, completely different outcomes. Before asking what to learn, the harder question is this: when something goes wrong, where do you want the responsibility to land? Fixing it, explaining it, or owning it. This choice isn’t permanent, but without it every new skill feels borrowed, as if it belongs to someone else’s future instead of yours.

The roadmap is decision-based, not time-based

Most roadmaps are calendars disguised as strategy. Three months for this. Six months for that. One year to mastery. But AI doesn’t reward time spent. It rewards decisions made while things are unclear. In 2026, progress shows up as judgment: knowing when to trust an output, when to question it, when speed becomes dangerous, and when something should never be automated at all.

Two people can learn the same tools and diverge completely. One waits to feel ready. The other keeps making small decisions and living with their consequences. A real AI career roadmap measures growth differently: not by courses completed, but by decisions you can now make calmly that used to feel intimidating.

Can you look at an AI-generated plan and see where it will fail in the real world? Can you explain why a system made a bad recommendation without blaming the system? Can you slow things down when everyone else wants speed? Those decisions compound faster than any checklist ever will.

How this roadmap actually moves

If you strip this down to its practical motion, it’s not complicated, just uncomfortable. First, you decide where responsibility should land. Not perfectly. Just consciously. Then you redesign one real task from your current work using AI end to end, while staying fully responsible for the result. Not a demo. Not a side project. Something that already matters.

You let AI assist. You let it miss context. You let it surprise you. And then you pay attention. Where did you trust it too much? Where did you underuse it? Which decisions were still yours no matter how good the output looked? You repeat that loop not to master tools, but to notice where judgment still carries weight. Over time, the scope expands, not because you learned more tools, but because you learned where you can safely stand. That’s the real progression.

The AI Career Roadmap 2026 must connect to your existing work and life

This is where most advice quietly collapses. It assumes a clean reset: quit, learn, rebrand, return. Real people don’t live like that. They have income to protect, reputations to maintain, families and responsibilities that don’t pause for career experiments.

Strong AI transitions don’t replace your current work. They grow out of it. A marketer doesn’t become an AI engineer. They become someone who designs systems that choose messaging and knows when to override them. A developer doesn’t stop coding. They become someone who supervises autonomous code and owns architectural consequences. A writer doesn’t disappear. They become someone who shapes narrative judgment while machines handle volume.

If a roadmap ignores what you already do, it may feel exciting, but it won’t be sustainable. The real entry point is always the overlap between where AI already touches your work and where you remain accountable no matter what. That overlap isn’t flashy, but it’s stable.

The end state is judgment, control, and responsibility

Here’s what most roadmaps avoid saying plainly: as AI does more work, humans are blamed more when things go wrong. When a system fails, no one asks the model to explain itself. They ask the person who allowed it to run. Why wasn’t this caught earlier? Why was this trusted? Why was this automated at all?

The real end state of an AI career in 2026 isn’t tool mastery. It’s ownership: understanding what the system is doing well enough to explain it, knowing its limits from experience, being comfortable stopping it, and standing present when it fails instead of hiding behind it. That’s not a beginner role. That’s a responsibility role. And that’s why judgment, not speed, becomes the rare skill.

Self-checks to avoid quiet drift

AI careers rarely fail loudly. They drift quietly. You stay busy. You keep learning. Six months later, you still feel oddly unanchored. That’s why you need diagnostic questions instead of motivational ones. Would your value survive if AI disappeared tomorrow? Can you explain your AI-assisted work without hiding behind jargon? Are you shaping decisions or just accelerating tasks? Does AI taking over execution make you calmer or more anxious? Anxiety usually points to unclear responsibility. These questions aren’t meant to feel good. They’re meant to keep you oriented.

What progress actually looks like

The transition doesn’t arrive with announcements or titles. It shows up quietly. Conversations begin to change. People stop asking how fast something can be done and start asking whether it should be done at all. An AI-generated report lands in your inbox, and you don’t read it for grammar. You read it for assumptions.

You can say which parts are safe and which are risky, and explain why using lived context, not theory. You still use the tools, maybe more than before. You’re just less impressed by them. When something breaks, the question isn’t which model failed. It’s why the system was allowed to run without a human pause.

Work doesn’t necessarily get easier. It gets steadier. You’re no longer proving you can keep up with AI. You’re showing that when AI moves fast, someone is still watching.

That’s when the roadmap stops feeling like a plan and starts feeling like a position you can stand in.

Where this leaves me

I still want cleaner answers sometimes. A checklist. A signal that I’m on track. But the longer I sit with this, the clearer it becomes: the best AI career roadmap for 2026 doesn’t end in certainty. It ends in capacity: the capacity to pause, to question, to intervene, and to say no.

Not because you fear AI, but because you’re responsible for what happens when it acts. The work doesn’t wrap up neatly. It doesn’t feel finished. It just feels held. And for now, that’s enough to keep going.

