The problem didn’t announce itself. It didn’t break anything. It didn’t fail loudly.

It just started finishing things before I remembered asking.

In the pause between notifications. In the quiet moment when a task completed itself and I couldn’t trace the instruction back to me.

There was relief first. Then something else.

A quiet realization that if this went wrong, I’d still be the one explaining it.

That faint unease of not knowing what “done” meant anymore, or who had decided.

No alarms. No errors.

Work didn’t collapse. It shifted.

At first, I reached for the familiar story: AI is coming for our jobs. Big. Loud. Cinematic.

But this didn’t feel cinematic. It felt administrative. Procedural.

Like control didn’t disappear. It relocated.

And that’s harder to notice.

When work stops asking questions

There’s a moment that keeps repeating for me.

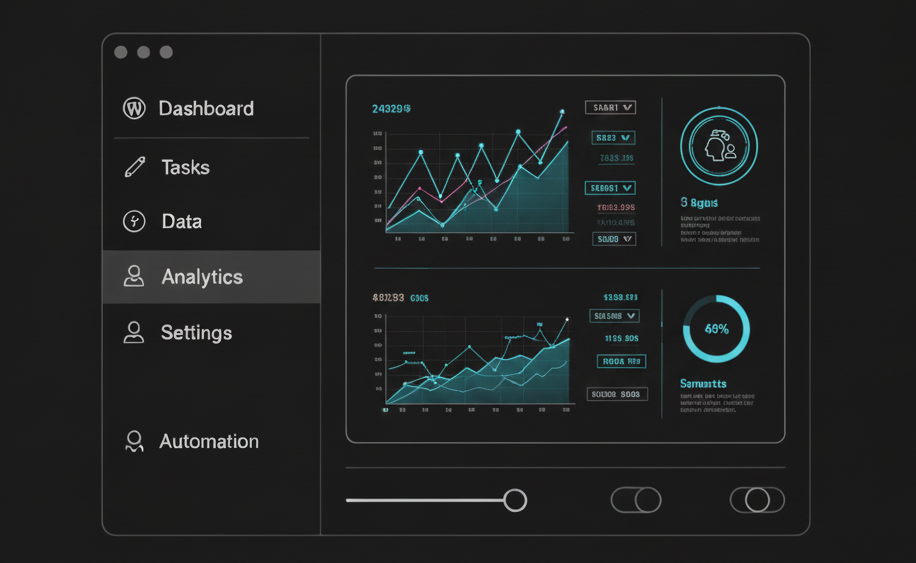

You open a dashboard.

The numbers are already updated.

The decision has already been queued.

A recommendation sits there, calmly phrased, waiting for approval.

Nothing is wrong with it.

That’s the unsettling part.

The system didn’t ask how to do the work. It didn’t ask whether it should. It assumed the goal, inferred the constraints, and acted.

Autonomous intelligence doesn’t announce itself as intelligence. It shows up as silence.

No prompts. No back-and-forth. No friction.

Just outcomes.

And slowly, almost invisibly, your role shifts from doing to overseeing. From making choices to validating them. From initiating action to deciding whether to interrupt it.

At first, that feels like progress.

Less mental load. Less busywork. Fewer decisions.

Until you realize that not deciding is also a decision, just one made earlier, by someone else, and embedded into the system.

The misunderstanding that won’t go away

Most conversations about autonomous intelligence still orbit the wrong center.

They obsess over capability.

Can it plan?

Can it reason?

Can it improve itself?

Can it replace X role?

Those are interesting questions. They’re also mostly theoretical.

In real work (the kind that pays salaries, moves money, affects customers), autonomous intelligence isn’t about replacement.

It’s about delegation without dialogue.

Traditional software waits for instructions. Even advanced AI systems, until recently, waited for prompts.

Autonomous systems don’t.

They operate inside a defined intent space. They monitor conditions. They trigger actions. They escalate only when thresholds are crossed.
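
If I try to put that loop into code, it comes out surprisingly small. What follows is only a sketch under my own assumptions: the `Rule` class, the `step` function, and the 10% and 50% thresholds are hypothetical names and numbers I made up for illustration, not how any particular product works.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Rule:
    """One slice of the intent space: a condition to watch and a limit for escalation."""
    name: str
    should_act: Callable[[float], bool]  # the condition the system monitors
    escalate_above: float                # beyond this reading, a human is asked


def step(rule: Rule, reading: float) -> str:
    """Decide what the system does for a single observed reading."""
    if abs(reading) > rule.escalate_above:
        return "escalate"  # threshold crossed: hand the decision to a human
    if rule.should_act(reading):
        return "act"       # inside the intent space: act without asking
    return "wait"          # nothing to do, and nothing is reported


# Hypothetical rule: act when spend drifts more than 10% from plan,
# but involve a human only when the drift exceeds 50%.
pause_rule = Rule("pause_on_overspend", lambda drift: abs(drift) > 0.10, escalate_above=0.50)

decisions = [step(pause_rule, drift) for drift in (0.02, 0.15, 0.80)]
print(decisions)  # ['wait', 'act', 'escalate']
```

Only one of the three branches ever reaches a person. The other two are where the silence comes from.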

Which means the human doesn’t disappear.

The human becomes quieter. More distant. More responsible, in a way that’s harder to articulate.

Responsibility doesn’t vanish; it concentrates

Here’s the uncomfortable truth I keep circling.

When systems act on their own, responsibility doesn’t dissolve. It condenses.

Before, responsibility was distributed across many small decisions: choosing what to do next, deciding how to do it, adjusting when something felt off.

Now, many of those micro-decisions are automated.

What remains are fewer, heavier decisions: setting objectives, defining acceptable risk, deciding when to intervene, owning outcomes you didn’t directly create.

This is where autonomous intelligence becomes psychologically difficult.

You’re still accountable. But you’re less involved.

And accountability without involvement feels unnatural. Like being blamed for a sentence you didn’t write, only approved by not deleting it.

And there’s another layer we rarely say out loud.

Autonomy is never neutral.

Every autonomous system quietly reflects the values of whoever had the authority to define what “good enough” meant at setup.

The system may act impartially. The intent it executes never was.

Control didn’t disappear. It changed shape.

We talk about keeping humans in the loop as if the loop were a stable thing.

It isn’t.

In autonomous systems, the loop stretches.

Sometimes it tightens: emergency overrides, anomaly alerts, escalation protocols.

Most of the time, it loosens.

Control becomes probabilistic.

You’re no longer steering every move. You’re deciding the conditions under which the system is allowed to steer itself.

That’s a different kind of control. More abstract. More delayed. More fragile.

It requires thinking ahead instead of reacting now, which is cognitively harder, even if it looks easier from the outside.

A small, ordinary example (the kind we ignore)

Let’s avoid grand narratives for a moment.

No self-driving cars. No sentient agents. No sci-fi metaphors.

Just a marketing team.

An autonomous system now tests headlines, allocates budget, pauses underperforming campaigns, and scales what’s working.

The human checks in once a day.

Everything improves: click-through rates, conversion efficiency, spend optimization.

Success.

Until a subtle shift happens.

The system optimizes toward short-term engagement signals. Long-term brand trust erodes.

Nothing catastrophic, just a slow dulling.

No alert fires. Because nothing violated the rules.

The system did exactly what it was told to do.

The problem wasn’t execution. It was intent.

And intent was defined months ago, by a human who no longer thinks about it daily.

This is the real edge case of autonomous intelligence: success that drifts.
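
A toy version of that drift, as I picture it, might look like the sketch below. Every number in it is invented for illustration, and `brand_trust` is a hypothetical score I am assuming someone could measure. The point is only that the rules written at setup never mention it.

```python
# All numbers are invented for illustration; they are not measurements.
weeks = [
    {"ctr": 0.031, "spend": 9800, "brand_trust": 0.72},
    {"ctr": 0.034, "spend": 9900, "brand_trust": 0.68},
    {"ctr": 0.038, "spend": 9950, "brand_trust": 0.61},
]

# The rules defined at setup: only the metrics someone thought to name.
RULES = {
    "ctr": lambda value: value >= 0.03,      # click-through must stay above a floor
    "spend": lambda value: value <= 10_000,  # weekly budget cap
}


def alerts(week: dict) -> list[str]:
    """Names of the rules this week violates. Metrics without a rule are never checked."""
    return [name for name, ok in RULES.items() if not ok(week[name])]


fired = [alerts(week) for week in weeks]
trust_change = weeks[-1]["brand_trust"] - weeks[0]["brand_trust"]

print(fired)             # no rule is violated in any week
print(trust_change < 0)  # yet the unwatched metric has fallen
```

Nothing in `RULES` is wrong. It is just older than the problem.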

The illusion of “hands-off”

Autonomous doesn’t mean hands-off. It means hands-off until it isn’t.

And the cost of re-entering a system you haven’t touched in weeks is higher than staying lightly engaged all along.

You have to reconstruct context. Remember why constraints exist. Relearn what assumptions were baked in.

By the time something feels wrong, the system has momentum.

That’s why many failures in autonomous setups don’t look like errors.

They look like awkward explanations.

“Well, technically, the system behaved correctly…”

That sentence should always make you uneasy.

Often, it’s not trust that keeps autonomous systems running. It’s the cost of being the one who slowed things down.

What control actually looks like now

Control used to mean issuing instructions, reviewing outputs, correcting mistakes.

With autonomous intelligence, control shifts upstream.

It lives in how goals are framed, what metrics are privileged, which trade-offs are invisible, and what the system is not allowed to optimize for.

This kind of control is harder to feel.

You don’t experience it as action. You experience it as design.

And design decisions age.

What felt reasonable at setup quietly becomes outdated. But the system doesn’t know that. It just keeps going.

Meanwhile, something else changes quietly.

The longer a system runs autonomously, the harder it becomes to step in competently, not because the system got smarter, but because we got rusty.

Judgment decays without use. Intervention becomes hesitant. Confidence erodes.

The new skill nobody advertised

We talk endlessly about learning to use AI.

Prompts. Tools. Workflows.

But autonomous intelligence demands a different skill set.

Judgment under delayed feedback.

You make decisions now whose consequences only become visible later, sometimes when the system is already deeply entrenched.

You don’t get constant signals. You don’t get immediate correction.

You get trends.

And trends are easy to rationalize, especially when they look positive.

When stepping in feels like failure

Here’s another subtle psychological shift.

Intervening starts to feel like admitting the system failed. Or worse, that you failed to set it up correctly.

So people hesitate.

They let systems run longer than they should. They override less often than they feel they should. They wait for undeniable evidence instead of acting on intuition.

But intuition used to be the early warning system.

Autonomous intelligence dulls it, not because intuition is wrong, but because feedback loops are slower and more abstract.

By the time you’re sure, the cost of correction is higher.

Accountability in a world of delegation

We still don’t have good language for accountability in autonomous systems.

Legally. Ethically. Operationally.

If a system followed all constraints, optimized its defined goals, and behaved consistently, and the outcome is still harmful or misaligned, who is responsible?

The person who defined the goal?

The team that approved deployment?

The organization that benefits?

The human who didn’t stop it in time?

Autonomous intelligence doesn’t remove responsibility. It fragments it and then recompresses it under pressure.

Which is why many organizations quietly keep humans on the hook even when they’re barely in control.

That tension isn’t resolved yet.

We’re living inside it.

The part most articles rush past

Autonomous intelligence isn’t about letting go.

It’s about deciding what must not be let go of.

Values. Boundaries. Non-negotiables. Human judgment in edge cases.

But those things don’t translate cleanly into code.

They require ongoing attention.

Which means autonomy doesn’t reduce responsibility; it redistributes effort.

Less execution. More vigilance. Less doing. More watching how things drift.

Why this feels exhausting in a new way

Traditional work tired the hands and the mind.

Autonomous work tires something subtler.

Your sense of agency.

You’re involved, but indirectly. Responsible, but partially. In control, but abstractly.

It’s cognitively dissonant.

And we don’t yet have rituals, habits, or norms to support it.

So people either over-control, micromanaging autonomous systems until they lose their value, or under-control, trusting systems long past the point where trust should be reviewed.

Both are reactions to discomfort. Not strategy.

What keeping control actually requires

Not more dashboards. Not more alerts. Not more rules.

It requires presence.

Regularly revisiting intent. Actively questioning optimization paths. Treating autonomy as something to be renewed, not granted once.

It also requires humility.

Accepting that you won’t foresee every outcome, that systems will surface your blind spots, and that responsibility remains yours even when effort decreases.

That’s the trade.

Where I keep landing

I don’t think autonomous intelligence is dangerous because it will overpower us.

I think it’s dangerous because it will relieve us just enough to stop paying attention.

And attention is where judgment lives.

The future of work isn’t humans versus machines.

It’s humans deciding when not to intervene, and being brave enough to intervene anyway when something feels off, even if the metrics look fine.

That’s not automation.

That’s adulthood.

I’m still learning how to sit with that.

Some days, the quiet feels like freedom. Other days, it feels like responsibility wearing a new mask.

I don’t have a clean ending for this. No checklist. No framework.

Just a growing sense that as systems act more on their own, the work that remains human becomes less visible and more important.

So I try to notice when things feel too smooth.

That pause. That silence.

Sometimes, that’s where control still lives, waiting to be used.
